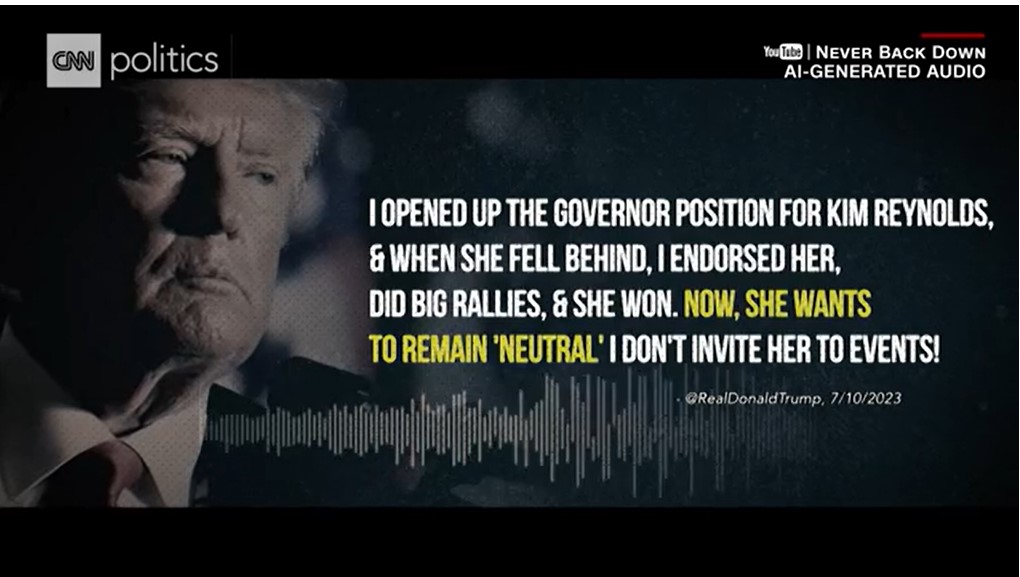

The words were true; the voice was not. That’s what happened when a pro-Ron DeSantis super PAC used an artificial intelligence version of Donald Trump’s voice in a new television ad attacking the former president.

This is one time that Trump might accurately cry, “fake news!”

A person familiar with the ad confirmed that while the former president had posted those words on his social platform, Truth Social, on July 10, he never actually voiced them. Trump’s voice was AI-generated.

The incursion of AI into every aspect of daily life promises to turn the coming 2024 election into a nightmare for candidates and voters alike. As this ad demonstrates, AI-generated content has become increasingly difficult to identify; so-called deepfakes are a new frontier of campaign advertising, one over which we are losing control.

How can candidates prevent their opponents from stealing their likeness and their voice in order to create “fake” narratives? And how can voters determine what is truly a candidate’s message and political stance if AI can invent those for them?

It is becoming increasingly clear that democracy has much to fear from AI. Can we ever again have confidence in a truly honest campaign?

AI can destroy democracy if used to mislead, misinform and confuse voters.

Americans already believe AI has played a part in eroding the foundations of democracy. Most think AI has had a role in the loss of trust in elections (57%), in threats to democracy (52%), and in the loss of trust in institutions (56%).

Even governments in democracies with strong traditions of rule of law can abuse the new abilities that AI systems give them.

The pro-DeSantis ad has the former president appearing to say, “I opened up the Governor position for Kim Reynolds. And when she fell behind, I endorsed her. Did big rallies and she won. Now she wants to remain neutral. I don’t invite her to events.”

The immediate objective of the message is to chastise Trump for attacking his fellow Republicans instead of turning his ammunition against his Democratic opponents. Looked at in isolation, the message is not that effective or damaging.

The really significant implications all have to do with the manipulation of the technology. It’s yet another political ad in which voters have no idea what’s real and what isn’t.

Donald Trump has already been a frequent target of deepfaked images and videos.

“AI is a technology that is here to stay,” said Aubrey Jewett, a political science professor at the University of Central Florida.

He says generative artificial intelligence, or gen AI, is especially effective in political ads because it so easily allows campaigns to distort faces, voices, and messages.

He believes categorically that such ads should carry disclaimers, saying, “The public deserves to know what is the unvarnished truth of what someone is talking or writing or saying something, versus what has been generated by a computer.”

Legislators like Amy Klobuchar and Cory Booker are so concerned about gen AI distorting political messages that they have introduced a bill called the “Real Political Ads Act,” which would force politicians and PACs to disclose when they use artificial intelligence in commercials.

Experts advise that campaigns can guard against AI deception by recognizing that such systems already deceive and are likely to continue to do so. They add that social media companies should act conservatively when blocking content: the harms of waiting to confirm that information is false before blocking it are often lower than the harms of inadvertently blocking posts by legitimate users conveying accurate information.

But while these precautions might stem the tide, the fear is that even if the public is able to heed such warnings on an intellectual level, faked messages nevertheless evoke a powerful visceral reaction, a “gut feeling” that will translate into a vote when election day comes.